About Me

I am a data scientist at Verily, currently working on the development of digital biomarkers for precision psychiatry. My research integrates interactive systems, sensing technologies, and machine learning algorithms to gain deeper insights into human behavior. I’m particularly focused on the innovative use of wearable sensor data and machine learning techniques to advance mental health assessment and enable more personalized care.

Before joining Verily, I worked on mobile sensing for mental health with Tanzeem Choudhury. I received my Ph.D. in information science from Cornell University. During my Ph.D., I collaborated with several industry researchers on a variety of research projects, including developing binary neural networks at Nokia Bell Labs, building a conversational system that improves workplace productivity and wellbeing at FX Palo Alto Laboratory, and developing digital biomarkers of mental fatigue at Google Research.

Past Projects

Assessing Inhibitory Control in the Wild

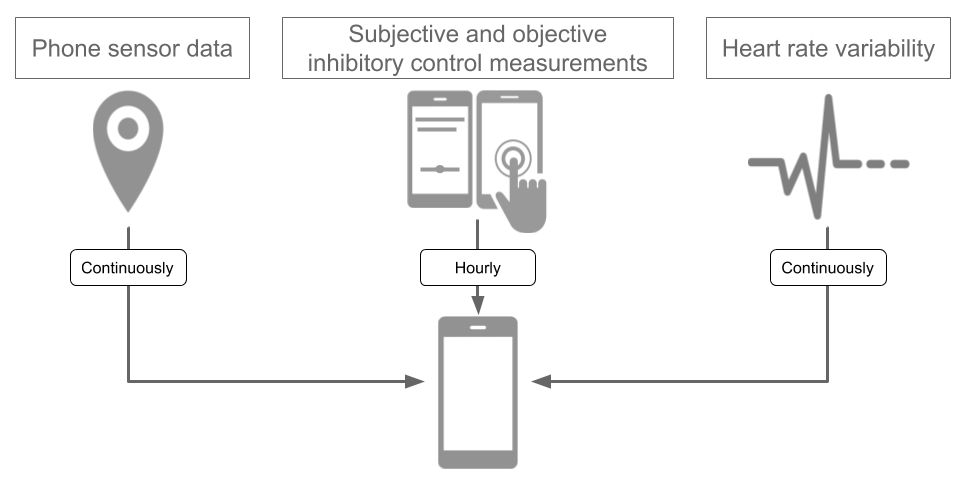

Inhibitory control is one of the core executive functions and contributes to our attention, performance, and physical and mental well-being. Being able to continuously and unobtrusively assess inhibitory control, and to understand the factors that mediate it, would allow us to design intelligent systems that help people manage their inhibitory control and well-being. To this end, we developed InhibiSense, an app that passively collects a user’s phone use and sensor data, collects ground-truth measures of inhibitory control through stop-signal tasks (SSTs) and ecological momentary assessments (EMAs), and records heart rate data transmitted from a wearable heart rate monitor. We conducted a 4-week in-the-wild study and fitted generalized estimating equation (GEE) and gradient boosting tree models on features extracted from participants’ phone use and sensor data to predict their inhibitory control and identify its digital markers.
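Stop-signal tasks yield a stop-signal reaction time (SSRT), which cannot be observed directly and has to be estimated. The sketch below uses the integration method, a widely used SSRT estimator; it is an illustration only and not necessarily the estimator InhibiSense uses. The function name and input format are hypothetical, go-trial omissions are simply excluded, and n is rounded up.

```python
import math


def estimate_ssrt(go_rts_ms, stop_responded, ssds_ms):
    """Estimate SSRT (ms) with the integration method (illustrative sketch).

    go_rts_ms: reaction times on go trials (omissions excluded in this sketch).
    stop_responded: one bool per stop trial, True if the participant failed to stop.
    ssds_ms: the stop-signal delay (SSD) used on each stop trial.
    """
    if not go_rts_ms or not stop_responded or len(stop_responded) != len(ssds_ms):
        raise ValueError("need go RTs and exactly one SSD per stop trial")
    # Probability of responding even though a stop signal appeared.
    p_respond = sum(stop_responded) / len(stop_responded)
    ordered = sorted(go_rts_ms)
    # n-th fastest go RT, where n = p(respond | signal) * number of go trials.
    n = max(1, math.ceil(p_respond * len(ordered)))
    nth_rt = ordered[min(n, len(ordered)) - 1]
    mean_ssd = sum(ssds_ms) / len(ssds_ms)
    return nth_rt - mean_ssd
```

For example, with 10 go RTs from 400 to 580 ms in 20 ms steps, failed stops on 2 of 4 stop trials, and SSDs of 200, 250, 250, and 300 ms, the 5th fastest go RT (480 ms) minus the mean SSD (250 ms) gives an SSRT of 230 ms.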

The results suggest that the top digital markers positively associated with an individual’s stop-signal reaction time (SSRT), where a longer SSRT indicates poorer inhibitory control, include phone use burstiness, the mean duration between two consecutive phone use sessions, the rate of change in battery level while the phone was not charging, and the frequency of incoming calls. The top digital markers negatively associated with SSRT include the standard deviation of acceleration, the frequency of short phone use sessions, the mean duration of incoming calls, the mean decibel level of ambient noise, and the percentage of time during which the phone was connected to the internet through a mobile network. However, we found no significant correlation between participants’ objective (SST) and subjective (EMA) measures of inhibitory control. Please see our paper for more details.
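To make a few of these markers concrete, the sketch below computes three session-level phone-use features from screen-on sessions. It is a minimal illustration under stated assumptions, not the paper’s feature pipeline: burstiness uses the Goh and Barabási definition (σ − μ)/(σ + μ) over the gaps between sessions, and the 30-second "short session" cutoff and the function name are hypothetical; the paper’s exact definitions may differ.

```python
import statistics


def phone_use_features(sessions, short_threshold_s=30.0):
    """Compute illustrative phone-use features from screen-on sessions.

    sessions: list of (start_s, end_s) tuples, in seconds.
    short_threshold_s: hypothetical cutoff below which a session counts as "short".
    """
    sessions = sorted(sessions)
    # Gap between the end of one session and the start of the next.
    gaps = [nxt[0] - cur[1] for cur, nxt in zip(sessions, sessions[1:])]
    durations = [end - start for start, end in sessions]

    mean_gap = statistics.mean(gaps) if gaps else float("nan")

    # Burstiness (Goh & Barabasi): (sigma - mu) / (sigma + mu) of inter-event gaps.
    # -1 means perfectly regular, about 0 is Poisson-like, near 1 is bursty.
    if len(gaps) >= 2:
        mu, sigma = statistics.mean(gaps), statistics.pstdev(gaps)
        burstiness = (sigma - mu) / (sigma + mu) if (sigma + mu) > 0 else float("nan")
    else:
        burstiness = float("nan")

    n_short = sum(1 for d in durations if d < short_threshold_s)
    return {"mean_gap_s": mean_gap, "burstiness": burstiness, "n_short_sessions": n_short}
```

Perfectly evenly spaced sessions give a burstiness of -1; values closer to 1 indicate phone use concentrated in bursts separated by long idle stretches.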

Overcoming Distractions during Transitions from Break to Work

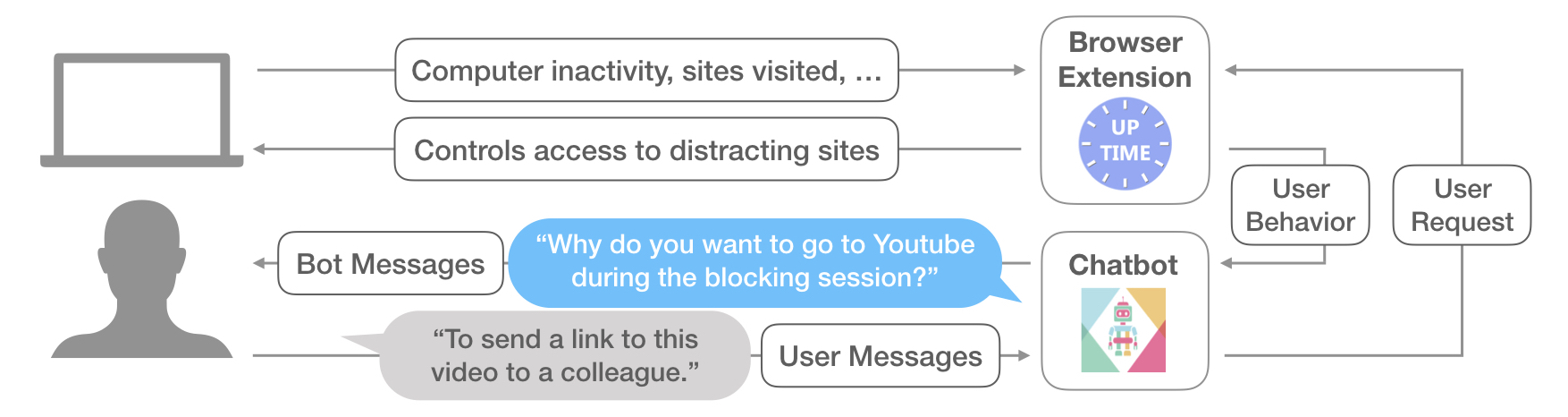

The transition from a break back to work is a moment when people are susceptible to digital distractions. To support workers through these transitions, we developed UpTime, a conversational system that senses transitions and automatically blocks distracting websites temporarily, helping users avoid distractions while still giving them control to take necessary digital breaks. UpTime consists of two major components: a Chrome extension and a Slack chatbot. The browser extension collects information about the user’s computer inactivity, visited sites, CPU usage, and similar signals, and controls access to distracting sites. The chatbot communicates with the browser extension in the background, interacts with the user, and “negotiates” with the user when they attempt to access a distracting site.

We conducted a 3-week in-situ study with 15 participants in a corporate setting, comparing UpTime with baseline behavior and with a state-of-the-art system. Our quantitative data showed that, with UpTime, participants were significantly less likely to visit a distracting site after returning from a break. The survey data showed that participants reported significantly lower perceived stress due to internal coercion when using UpTime, and there was no significant difference in participants’ sense of control among the three conditions. The findings suggest that automatic, temporary blocking at transition points can significantly reduce digital distractions and stress without sacrificing workers’ sense of control. Please see our paper for more details.

Using Behavioral Rhythms and Multi-task Learning to Predict Fine-Grained Symptoms of Schizophrenia

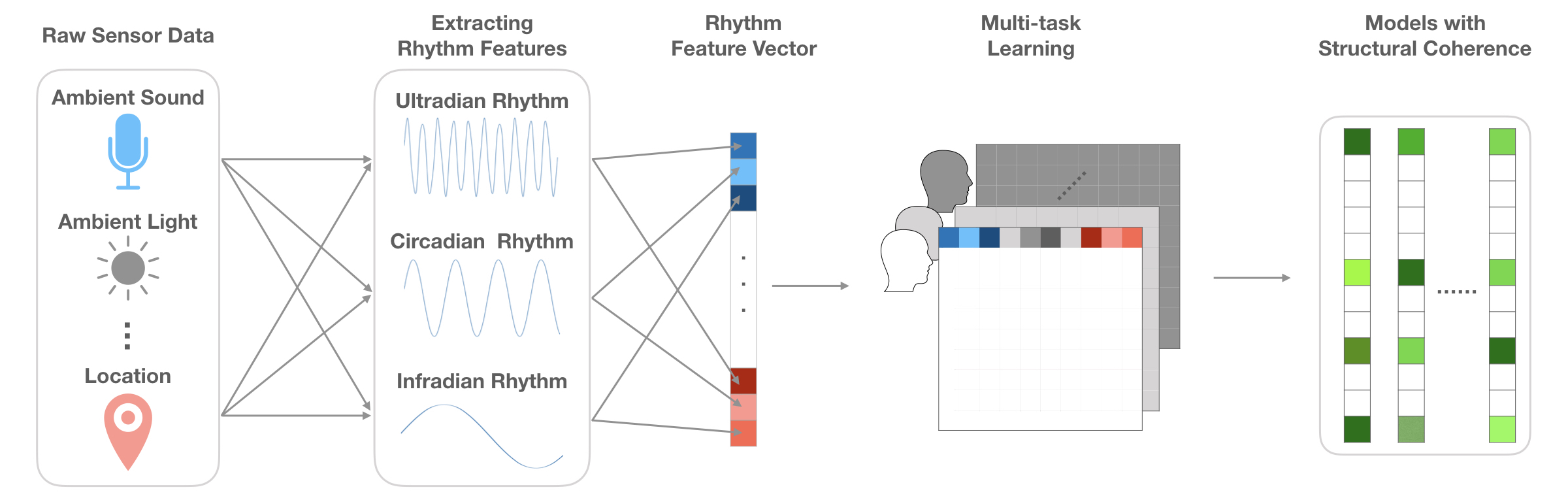

Schizophrenia is a severe and complex psychiatric disorder with heterogeneous, dynamic, multidimensional symptoms. Early intervention is the most effective approach to preventing symptoms from deteriorating. We aimed to use passive mobile sensing data to build machine learning models that predict fine-grained symptoms of schizophrenia and provide interpretable results that clinicians can base clinical decisions on. We first extracted a variety of rhythm features corresponding to patients’ ultradian, circadian, and infradian rhythms. We then trained prediction models using multi-task learning, which enables structural coherence across different patients’ models while accounting for individual differences.
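One simple way to turn an hourly sensor stream into rhythm features is a single-frequency periodogram: project the mean-centered series onto cosine and sine waves at a chosen period and report the amplitude. The sketch below is an illustration only; the paper’s rhythm extraction may use a different method, and the specific periods (12 h for ultradian, 24 h for circadian, 7 days for infradian) and function names are assumptions chosen to show one period per band.

```python
import math


def rhythm_amplitude(hourly_values, period_h):
    """Amplitude of the sinusoidal component with the given period (hours).

    Projects the mean-centered hourly series onto cos/sin at frequency 1/period_h.
    Recovers a pure sinusoid's amplitude exactly when the series spans whole cycles.
    """
    n = len(hourly_values)
    mean = sum(hourly_values) / n
    omega = 2 * math.pi / period_h
    a = 2 / n * sum((x - mean) * math.cos(omega * t) for t, x in enumerate(hourly_values))
    b = 2 / n * sum((x - mean) * math.sin(omega * t) for t, x in enumerate(hourly_values))
    return math.hypot(a, b)


# Illustrative periods: ultradian (< 24 h), circadian (24 h), infradian (> 24 h).
RHYTHM_PERIODS_H = {"ultradian_12h": 12, "circadian_24h": 24, "infradian_7d": 168}


def rhythm_features(hourly_values):
    """Map each illustrative rhythm band to its amplitude in the series."""
    return {name: rhythm_amplitude(hourly_values, p) for name, p in RHYTHM_PERIODS_H.items()}
```

For one week of hourly data (168 points) that follows a clean daily cycle, only the circadian feature is non-zero; a change in that amplitude over time is the kind of rhythm shift the models could relate to symptoms.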

The results suggest that models trained with multi-task learning can achieve better prediction accuracy than models trained with single-task learning. The results also provide insights into the relationship between rhythms and the trajectories of different symptoms. For example, the ultradian rhythm of ambient sound is associated with hallucination symptoms, such as seeing things and hearing voices, while the ultradian rhythm of patients’ text messaging is associated with symptoms related to thinking clearly and feeling social. These findings can inform the design of intervention tools that trigger interventions based on changes in patients’ rhythms. Please see our paper for more details.
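A common way to share structure across patients while allowing individual differences is mean-regularized multi-task learning (Evgeniou and Pontil), in which each patient’s weights are a shared vector plus a patient-specific offset. The paper’s exact multi-task formulation may differ; this NumPy sketch is only an illustration, and the function name and penalty values are hypothetical. It solves the problem as a single ridge regression on an augmented feature map.

```python
import numpy as np


def fit_mean_regularized_mtl(X_by_task, y_by_task, lam_shared=1.0, lam_task=1.0):
    """Fit w_t = w0 + v_t for each task (patient) t by minimizing

        sum_t ||y_t - X_t (w0 + v_t)||^2 + lam_shared ||w0||^2 + lam_task sum_t ||v_t||^2.

    Feature-augmentation trick: one ridge regression with unit penalty on
    phi(x, t) = [x / sqrt(lam_shared), 0, ..., x / sqrt(lam_task) in block t, ..., 0].
    Returns an array of shape (n_tasks, n_features) holding each task's weights.
    """
    n_tasks = len(X_by_task)
    d = X_by_task[0].shape[1]
    a, b = 1.0 / np.sqrt(lam_shared), 1.0 / np.sqrt(lam_task)
    rows, targets = [], []
    for t, (X, y) in enumerate(zip(X_by_task, y_by_task)):
        block = np.zeros((X.shape[0], d * (n_tasks + 1)))
        block[:, :d] = a * X                        # shared component
        block[:, d * (t + 1): d * (t + 2)] = b * X  # task-specific component
        rows.append(block)
        targets.append(y)
    Phi, y_all = np.vstack(rows), np.concatenate(targets)
    # Ridge closed form with unit penalty: u = (Phi^T Phi + I)^-1 Phi^T y.
    u = np.linalg.solve(Phi.T @ Phi + np.eye(Phi.shape[1]), Phi.T @ y_all)
    w0 = a * u[:d]
    V = b * u[d:].reshape(n_tasks, d)
    return w0 + V
```

The two penalties connect directly to the comparison above: a very large `lam_task` ties all patients to one pooled model, while a very large `lam_shared` with a small `lam_task` decouples them into independent per-patient ridge models, which resembles a single-task baseline.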

Deterministic Binary Filters for Convolutional Neural Networks

Neural network architectures have achieved breakthrough performance in various applications, such as image and speech recognition. However, these models tend to have many parameters and therefore require a large amount of memory to store them, which makes them hard to deploy on embedded systems or IoT platforms with limited memory. Recent research has focused on approaches that reduce a model’s on-device memory footprint while maintaining its performance, such as network compression techniques and novel layer architectures.

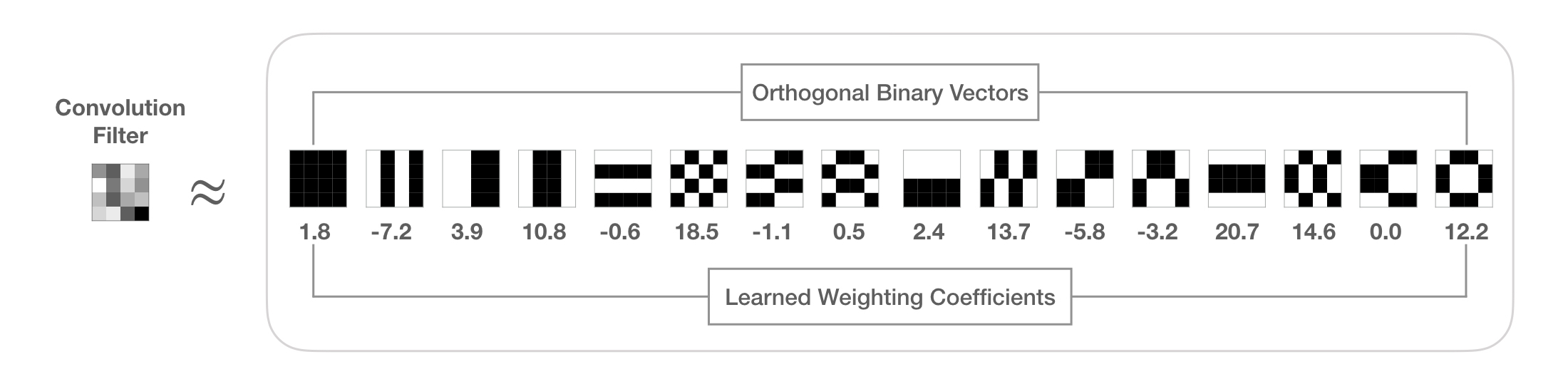

We proposed Deterministic Binary Filters (DBF), a new approach that learns the weighting coefficients of predefined orthogonal binary bases instead of learning convolution filters directly. Essentially, each filter in a convolutional network is a linear combination of orthogonal binary vectors, which can be generated using orthogonal variable spreading factor (OVSF) codes. This not only reduces the memory footprint but also enables efficient execution on embedded devices. Moreover, the amount of memory reduction can be tuned to the desired level of accuracy.
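The sketch below illustrates the core idea: generate OVSF codes with the usual tree rule (each code c spawns [c, c] and [c, −c]) and express a flattened filter as a weighted sum of those ±1 codes. In DBF the coefficients are learned during training; here they are obtained by projecting a given filter onto the bases purely for illustration. Because the codes are mutually orthogonal with squared norm L, the least-squares coefficient on code c is ⟨w, c⟩ / L. The sketch assumes the flattened filter length is a power of two (for example a 2×2×4 filter), and the function names are hypothetical.

```python
def ovsf_codes(length):
    """All OVSF codes of the given length (a power of two), as lists of +1/-1."""
    if length < 1 or length & (length - 1):
        raise ValueError("length must be a power of two")
    codes = [[1]]
    while len(codes[0]) < length:
        # Each parent code c spawns two children: [c, c] and [c, -c].
        codes = [child for c in codes for child in (c + c, c + [-x for x in c])]
    return codes


def project_onto_bases(weights, codes):
    """Least-squares coefficients of a flattened filter on orthogonal +/-1 codes."""
    n = len(weights)
    return [sum(w * c for w, c in zip(weights, code)) / n for code in codes]


def reconstruct_filter(coeffs, codes):
    """Filter = linear combination of binary bases weighted by coeffs."""
    n = len(codes[0])
    return [sum(a * code[i] for a, code in zip(coeffs, codes)) for i in range(n)]
```

Storing K coefficients instead of all L filter weights keeps K/L of the numbers for that filter; for example, keeping 2 of 8 codes would store 75% fewer values for that filter under this sketch’s assumptions. Which codes to keep, and how the reported reductions were measured, follow the paper’s own setup.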

Our DBF technique can be integrated into well-known architectures such as ResNet and SqueezeNet, reducing model size by 75% with only a 2% loss in accuracy when evaluated on the CIFAR-10 dataset. In addition, we designed a new architecture called DBFNet, built on DBF, for more challenging classification tasks on datasets such as ImageNet. DBFNet achieves a 75% memory reduction with 40.5% top-1 accuracy and 65.7% top-5 accuracy on ImageNet. The results suggest that convolution filters composed of weighted combinations of orthogonal binary bases can significantly reduce the number of parameters while maintaining comparable accuracy, which provides insights for future filter design. Please see our paper for more details.